Artificial intelligence is transforming how decisions are made in everything from credit approvals to healthcare diagnostics. Yet as AI systems become more autonomous, questions of responsibility, fairness, and trustworthiness are more urgent than ever. While model performance continues to accelerate, ethical safeguards have lagged behind. We can no longer afford to treat ethics as a downstream patch to upstream design. Ethics must be integrated into the foundations of AI, not to slow innovation, but to ensure it’s sustainable, accountable, and human-centered.

Transparency: Not Exposure, but Engineering

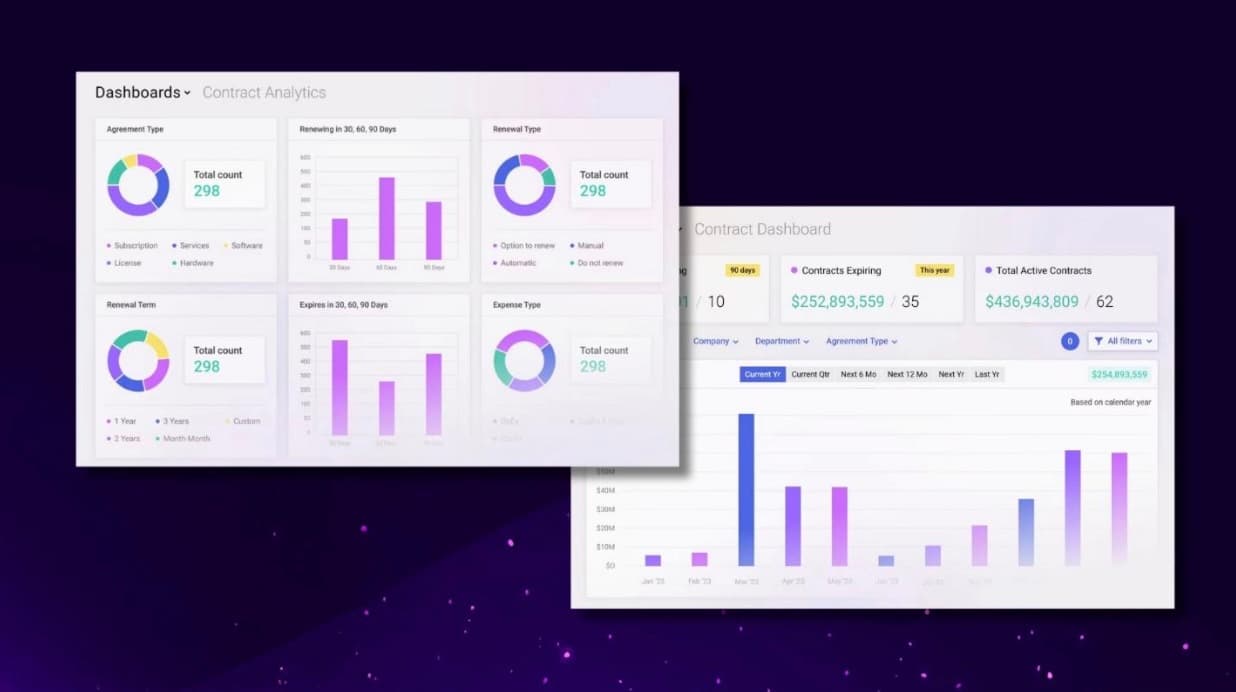

True AI transparency isn’t about revealing proprietary code. It’s about offering structured insight into how systems function and what consequences they produce. Rahul B., who has worked in regulated digital finance systems, argues that explainability and auditability can be embedded “by design”—as they are in compliant financial software. He and Topaz Hurvitz advocate for architectural transparency: using model-agnostic explanations, audit logs, and sandboxed decision visualizations to make complex models interpretable without compromising intellectual property.

Sudheer A. and Niraj K Verma echo this view, emphasizing that explainability must extend beyond technical teams to regulators, auditors, and end-users. Transparency isn’t a disclosure strategy—it’s a system architecture that anticipates accountability.
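
To make the "audit logs" idea concrete, here is a minimal sketch of a tamper-evident decision log. It is an illustration, not a method described by the contributors: the `AuditLog` class, its field names, and the hash-chaining scheme are all assumptions chosen for the example. Each entry stores the model version, inputs, decision, and an explanation payload, and is linked to the previous entry by a SHA-256 hash so an auditor can detect later edits without seeing the model itself.

```python
import hashlib
import json
from dataclasses import dataclass, field


def _digest(body):
    # Canonical JSON (sorted keys) so identical content always hashes identically.
    return hashlib.sha256(json.dumps(body, sort_keys=True).encode("utf-8")).hexdigest()


@dataclass
class AuditLog:
    """Hypothetical append-only decision log; entries are chained by hash."""
    entries: list = field(default_factory=list)

    def record(self, model_version, inputs, decision, explanation):
        """Append one decision and return the stored entry."""
        prev_hash = self.entries[-1]["hash"] if self.entries else "0" * 64
        body = {
            "model_version": model_version,
            "inputs": inputs,
            "decision": decision,
            "explanation": explanation,  # e.g. per-feature attributions
            "prev_hash": prev_hash,
        }
        entry = {**body, "hash": _digest(body)}
        self.entries.append(entry)
        return entry

    def verify(self):
        """Return True only if no entry was altered, removed, or reordered."""
        prev_hash = "0" * 64
        for entry in self.entries:
            body = {k: v for k, v in entry.items() if k != "hash"}
            if body["prev_hash"] != prev_hash or _digest(body) != entry["hash"]:
                return False
            prev_hash = entry["hash"]
        return True
```

An auditor would call `verify()` before trusting the log. The design choice here is that the log exposes what was decided and why, but not the model's code or weights.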

Bias Is Not a Bug—It’s a Lifecycle Risk

Too often, AI bias is treated as a technical flaw to be fixed by developers alone. But bias is structural. As Ram Kumar N. puts it, identifying and addressing it must be “a team sport” involving engineers, designers, legal teams, and end users alike. Arpna Aggarwal reinforces this point, arguing that bias mitigation is most effective when it combines technical tools, like fairness metrics and synthetic data, with organizational processes such as real-time monitoring and human oversight during deployment.
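
As one example of the "fairness metrics" mentioned above, the sketch below computes two widely used group-level measures: the demographic parity difference and the disparate impact ratio. The function names and the choice of these two metrics are assumptions for illustration; the contributors do not specify which metrics they have in mind, and no single metric captures fairness on its own.

```python
from collections import defaultdict


def selection_rates(decisions, groups):
    """Share of favorable (truthy) decisions for each group."""
    if len(decisions) != len(groups):
        raise ValueError("decisions and groups must have the same length")
    if not decisions:
        raise ValueError("at least one decision is required")
    totals = defaultdict(int)
    favorable = defaultdict(int)
    for decision, group in zip(decisions, groups):
        totals[group] += 1
        favorable[group] += 1 if decision else 0
    return {g: favorable[g] / totals[g] for g in totals}


def demographic_parity_difference(decisions, groups):
    """Gap between the highest and lowest group selection rates (0 = parity)."""
    rates = selection_rates(decisions, groups)
    return max(rates.values()) - min(rates.values())


def disparate_impact_ratio(decisions, groups):
    """Lowest selection rate divided by the highest (1 = parity)."""
    rates = selection_rates(decisions, groups)
    highest = max(rates.values())
    if highest == 0:
        return 1.0  # no group received a favorable outcome
    return min(rates.values()) / highest
```

Numbers like these are a starting point for the cross-functional discussion the paragraph describes. Deciding which gap is acceptable, and for which groups, is not something the code can settle.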

Amar Chheda introduces a critical nuance: not all biases are unethical. Audience segmentation, for instance, may enhance marketing relevance. However, when such strategies become exploitative, as in the deliberate design of women’s clothing with smaller pockets to promote handbag sales, the ethical boundary is crossed. AI forces us to confront the scale and subtlety of such decisions, especially when the system, not the human, is making the call.

Governance Requires More Than Principles

A common theme across sectors is the need for proactive governance. Srinivas Chippagiri warns against one-off audits, describing bias as a persistent vulnerability that must be monitored like cybersecurity threats. Meanwhile, Dmytro Verner and Shailja Gupta argue for establishing cross-functional governance teams with shared responsibility, spanning model design, legal compliance, and risk assessment. Rahul B. supports this model, describing cross-functional charters that treat bias not as a technical issue but as a strategic design challenge.
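
The contrast between a one-off audit and continuous monitoring can be sketched as a rolling check over recent decisions. Everything here is hypothetical: the `ParityMonitor` class, the window size, the gap threshold, and the minimum sample size are illustrative assumptions, not values recommended by the contributors.

```python
from collections import deque


class ParityMonitor:
    """Hypothetical rolling monitor for the gap in favorable-outcome rates."""

    def __init__(self, window_size=500, max_gap=0.2, min_per_group=30):
        self.window = deque(maxlen=window_size)  # oldest decisions drop off
        self.max_gap = max_gap
        self.min_per_group = min_per_group

    def observe(self, group, favorable):
        """Record one decision; return an alert dict if the gap is too large."""
        self.window.append((group, bool(favorable)))
        counts, positives = {}, {}
        for g, fav in self.window:
            counts[g] = counts.get(g, 0) + 1
            positives[g] = positives.get(g, 0) + int(fav)
        # Ignore groups with too few recent decisions to give a stable rate.
        rates = {
            g: positives[g] / n
            for g, n in counts.items()
            if n >= self.min_per_group
        }
        if len(rates) < 2:
            return None
        gap = max(rates.values()) - min(rates.values())
        if gap > self.max_gap:
            return {"gap": gap, "rates": rates}
        return None
```

In a deployment, a non-`None` return would be routed to whoever owns the governance charter, much as a security alert goes to an on-call team.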

Rajesh Ranjan notes that governance is not merely internal; it also includes public-facing mechanisms. Transparency reports, stakeholder disclosures, and third-party audit frameworks are crucial in building public trust. Without visible checks and balances, ethical claims remain aspirational.

A Shared Ethics Framework: Urgent, But Not Uniform

Despite cultural, regulatory, and industrial variation, there is broad agreement on the need for a global ethical framework. Suvianna Grecu likens this to fields like medicine and law, where international standards enable local adaptation without sacrificing ethical consistency. Junaith Haja proposes a set of core principles: fairness, transparency, accountability, security, and human oversight. These could serve as the backbone of any ethics charter, while remaining flexible for sector-specific implementation.

However, as Sai Saripalli and Devendra Singh Parmar caution, ethics cannot be dictated solely by governments or companies. Effective frameworks must be co-created through collaboration between technologists, regulators, civil society, and academia. Industry-driven ethics initiatives, while valuable, can rarely hold their own sponsors accountable.

Ethics Must Be Intentional—Not Aspirational

Perhaps the clearest takeaway from the insights shared is that ethical AI must be intentional. As Hina Gandhi notes, creating dedicated “ethics auditors” and institutionalizing responsibility are practical—not theoretical—steps. Sanjay Mood warns that leadership must own ethical outcomes early in the process; by the time flaws are discovered downstream, it’s often too late to correct them without public harm.

This view is reinforced by EBL News and Shailja Gupta, who advocate for decentralized but enforceable structures. Ethical infrastructure must be as robust as technical architecture: regularly audited, transparently governed, and tied to incentives.

Conclusion: The Future of AI Depends on What We Build Into It

AI is not neutral. Every model reflects the assumptions, incentives, and values of its creators. If we design for speed and efficiency alone, we will build systems that amplify existing inequities and obscure accountability. But if we design with conscience by embedding transparency, managing bias, and structuring governance, we can build systems that support human flourishing rather than undermine it.

Ethics is not the opposite of innovation. It is what makes innovation worth trusting.