As a data and AI professional working in the US tech landscape, I’ve seen one thing repeatedly: companies want to scale AI everywhere, but they often get stuck when it comes to governing it. We can build LLM-powered assistants for marketing, analytics tools for finance, and even AI-driven R&D accelerators, but if we don’t get the governance right, the whole system slows down. Or worse, it becomes a compliance nightmare.

From my experience contributing to AI Time Journal (“From Bottleneck to Force Multiplier: How Data Engineering Powers Responsible AI at Scale”) and sharing ideas on the AI Frontier Network, the lesson is simple: governance doesn’t have to be a brake. Done right, it can be the engine that lets innovation run safely.

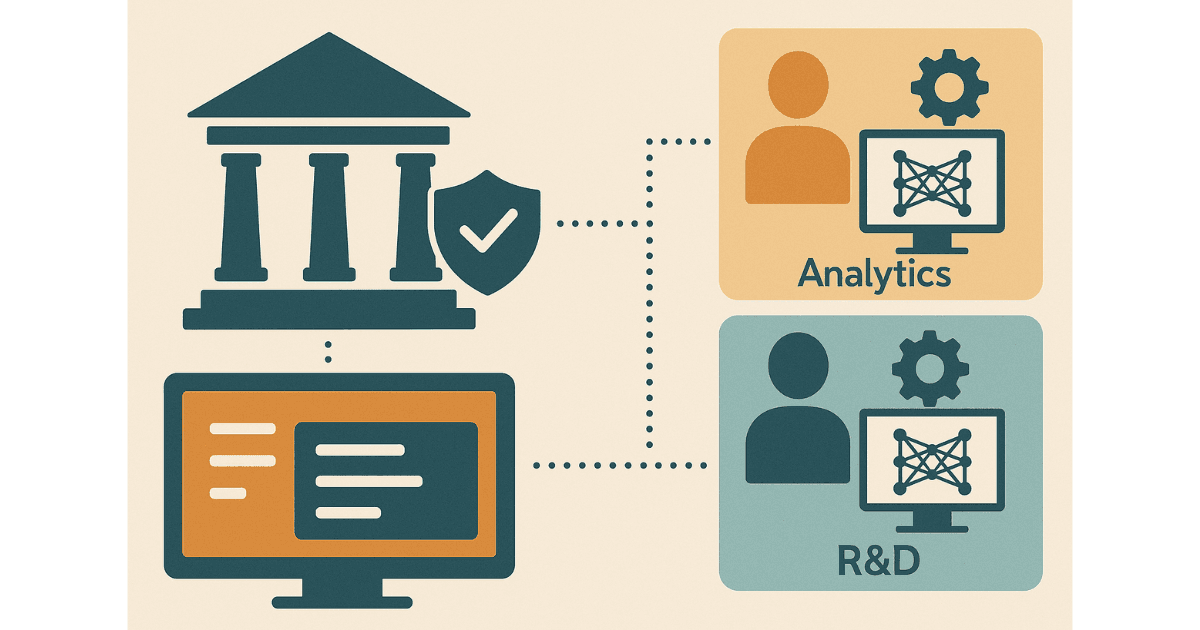

That’s why I advocate for federated governance, a structure where domain teams build, own, and run AI tools while a central AI Trust Office sets the rules, provides the guardrails, and ensures nothing falls through the cracks. This isn’t about adding bureaucracy; it’s about helping teams move fast without risking brand, compliance, or customer trust.

Why Centralized Governance Doesn’t Work Anymore

In large enterprises, central AI governance teams can’t possibly keep up. Each function, whether a chatbot in retail, a fraud detection model in banking, or a data assistant for operations, needs to evolve quickly. When every change must route through one central gatekeeper, momentum dies. Teams end up bypassing the process, which creates even more risk than the gatekeeper was meant to prevent.

How Federated Governance Comes Together

Here’s how it works in practice:

AI Council: This central AI Trust Office creates policies, risk tiers, audit processes, and shared tools for monitoring and testing. It doesn’t micromanage, but it makes sure everyone is playing by the same rules. For example, if the business domain is healthcare, the office sets up rules based on industry compliance requirements such as HIPAA.
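To make “risk tiers” concrete, here is a minimal sketch of how a central office might encode tiers as a shared policy that every domain team checks against. The tier names, the trigger conditions (`handles_phi`, `customer_facing`, `automated_decision`), the control names, and the helpers `classify` and `missing_controls` are all hypothetical illustrations, not a prescribed framework; a real council would define its own criteria.

```python
from dataclasses import dataclass
from enum import Enum


class RiskTier(Enum):
    LOW = "low"
    MEDIUM = "medium"
    HIGH = "high"


@dataclass(frozen=True)
class AIUseCase:
    name: str
    handles_phi: bool         # hypothetical flag: touches protected health information (HIPAA scope)
    customer_facing: bool     # hypothetical flag: output reaches customers directly
    automated_decision: bool  # hypothetical flag: acts without a human in the loop


# Hypothetical controls the central office requires at each tier.
REQUIRED_CONTROLS: dict[RiskTier, frozenset[str]] = {
    RiskTier.LOW: frozenset({"usage_logging"}),
    RiskTier.MEDIUM: frozenset({"usage_logging", "drift_monitoring", "bias_testing"}),
    RiskTier.HIGH: frozenset(
        {"usage_logging", "drift_monitoring", "bias_testing", "human_review", "council_signoff"}
    ),
}


def classify(use_case: AIUseCase) -> RiskTier:
    """Assign the highest tier whose trigger condition applies."""
    if use_case.handles_phi or use_case.automated_decision:
        return RiskTier.HIGH
    if use_case.customer_facing:
        return RiskTier.MEDIUM
    return RiskTier.LOW


def missing_controls(use_case: AIUseCase, implemented: set[str]) -> set[str]:
    """Return the controls a domain team still needs before launch."""
    return set(REQUIRED_CONTROLS[classify(use_case)] - implemented)


if __name__ == "__main__":
    triage_bot = AIUseCase(
        "patient-triage-assistant", handles_phi=True, customer_facing=True, automated_decision=False
    )
    print(classify(triage_bot))  # RiskTier.HIGH
    print(sorted(missing_controls(triage_bot, {"usage_logging", "drift_monitoring"})))
    # ['bias_testing', 'council_signoff', 'human_review']
```

The point of the design is the split of ownership: the council owns `REQUIRED_CONTROLS` and the classification rules, while each domain team runs the check against its own use case and closes the gaps itself.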

Domain teams: Individual business units such as marketing, data science, and operations don’t just consume AI; they build and improve tools themselves using platforms like Amazon Q and AI-powered IDEs. This lets business units take an idea from experiment to working prototype and then into production. They monitor metrics such as bias and drift and make sure their systems stay within the guardrails.
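As one example of what “monitoring drift” can look like inside a domain team, the sketch below computes the Population Stability Index (PSI) between a baseline sample of a model input or score and a current sample. PSI is a swapped-in choice of metric, not something the governance model prescribes, and the 0.1 / 0.25 thresholds are a commonly cited rule of thumb that teams should tune. The function names `psi` and `drift_status` are hypothetical.

```python
import math
from typing import Sequence


def psi(baseline: Sequence[float], current: Sequence[float], bins: int = 10, eps: float = 1e-6) -> float:
    """Population Stability Index over equal-width bins spanning the baseline range."""
    lo, hi = min(baseline), max(baseline)
    width = (hi - lo) / bins or 1.0  # guard against a constant baseline

    def proportions(values: Sequence[float]) -> list[float]:
        counts = [0] * bins
        for v in values:
            idx = int((v - lo) / width)
            idx = min(max(idx, 0), bins - 1)  # clamp out-of-range values into the edge bins
            counts[idx] += 1
        # eps floor avoids log(0) and division by zero for empty bins
        return [max(c / len(values), eps) for c in counts]

    expected = proportions(baseline)
    actual = proportions(current)
    return sum((a - e) * math.log(a / e) for a, e in zip(actual, expected))


def drift_status(score: float) -> str:
    """Map a PSI score to a coarse label (rule-of-thumb thresholds, assumed)."""
    if score < 0.1:
        return "stable"
    if score < 0.25:
        return "moderate"
    return "significant"


if __name__ == "__main__":
    baseline = [i / 100 for i in range(100)]
    shifted = [0.9 + i / 1000 for i in range(100)]
    print(drift_status(psi(baseline, baseline)))  # stable
    print(drift_status(psi(baseline, shifted)))   # significant
```

In a federated setup, the central office would typically standardize the metric and thresholds, while each domain team decides which features and scores to track and runs the check on its own schedule.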

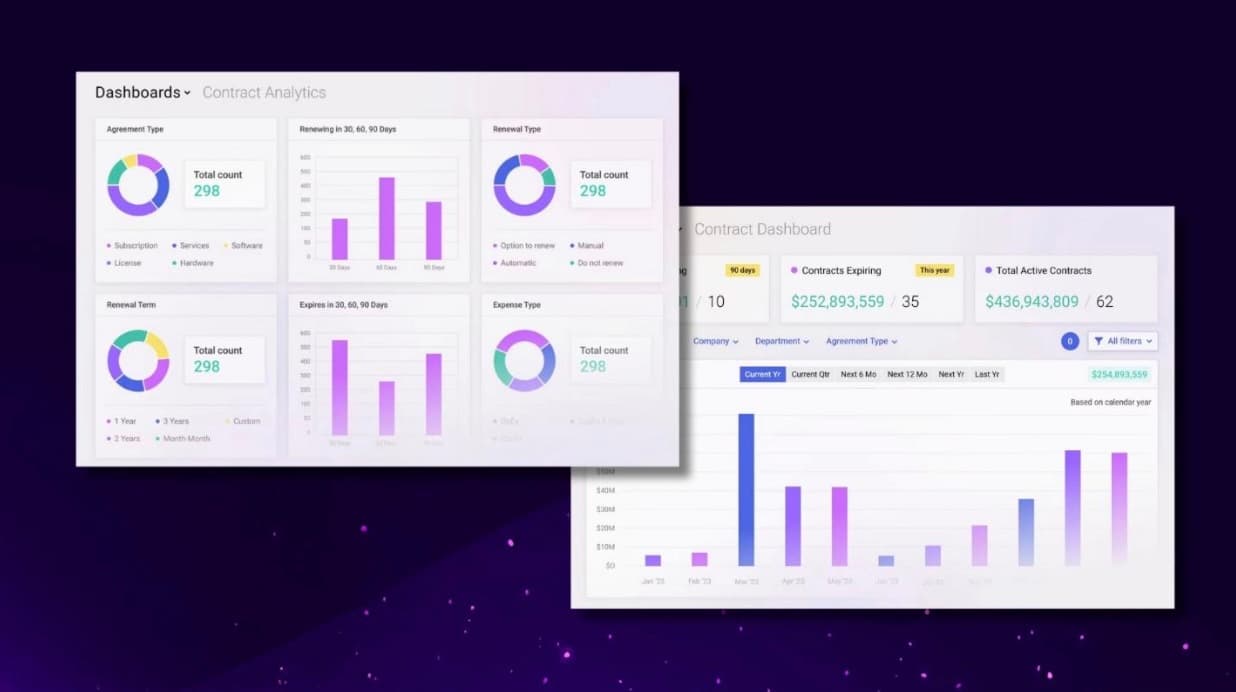

Shared Observability: The shared observability and infrastructure layer ties it all together. Central dashboards, cross-account visibility, and unified logs mean leadership can see what’s happening across the company without blocking teams. Central IT handles account structure, baseline security, and billing, while domain teams keep ownership of their environments.

Why This Matches Today’s AI Business Needs

Speed without Chaos: Teams can build and iterate fast, but with built-in safety nets.

Scale with Confidence: You don’t need to hire an army for a central AI office; the model scales naturally because day-to-day oversight is distributed across the domain teams.

Consistency without Red Tape: Common standards keep things aligned, even as each team moves at its own pace.

Empowerment at the Edge: The teams closest to the customer are the ones shaping the tools.

What’s Next

As more companies push AI deeper into their operations, I see federated governance becoming the default model. The trick is to set up a strong central framework, train domain teams to operate within it, and keep everyone talking. One thing I’ve found helpful is hosting monthly AI tech talks where domain teams and the Trust Office share lessons, showcase tools, and iron out any gaps in approach.

Done this way, you don’t just scale AI; you scale it safely, efficiently, and with the people who know the work best driving the change.