{"root": {"type": "root", "format": "", "indent": 0, "version": 1, "children": [{"type": "paragraph", "format": "", "indent": 0, "version": 1, "children": [{"mode": "normal", "text": "From newsroom algorithms to personalized entertainment streams, AI is rapidly transforming how media is made, distributed, and consumed. It\u2019s not just a new tool\u2014it\u2019s a new framework for storytelling, audience engagement, and operational efficiency. But as media moves faster, becomes more responsive, and scales with automation, a central question persists: how do we preserve ", "type": "text", "style": "", "detail": 0, "format": 0, "version": 1}, {"rel": null, "url": "https://www.aitimejournal.com/ai-as-a-creative-catalyst-redefining-human-imagination/52739/", "type": "link", "title": null, "format": "", "indent": 0, "target": null, "version": 1, "children": [{"mode": "normal", "text": "truth, trust, and creativity", "type": "text", "style": "", "detail": 0, "format": 0, "version": 1}], "direction": "ltr"}, {"mode": "normal", "text": "?", "type": "text", "style": "", "detail": 0, "format": 0, "version": 1}], "direction": "ltr", "textStyle": "", "textFormat": 0}, {"type": "paragraph", "format": "", "indent": 0, "version": 1, "children": [{"mode": "normal", "text": "We gathered insights from engineers, journalists, strategists, and executives at the forefront of AI and media. 
Here\u2019s what they\u2019re seeing\u2014and shaping.", "type": "text", "style": "", "detail": 0, "format": 0, "version": 1}], "direction": "ltr", "textStyle": "", "textFormat": 0}, {"tag": "h3", "type": "heading", "format": "", "indent": 0, "version": 1, "children": [{"mode": "normal", "text": "AI Is Scaling Media Creation and Personalization", "type": "text", "style": "", "detail": 0, "format": 0, "version": 1}], "direction": "ltr"}, {"type": "paragraph", "format": "", "indent": 0, "version": 1, "children": [{"mode": "normal", "text": "Across newsrooms, studios, and social platforms, AI is helping media teams do more with less. As ", "type": "text", "style": "", "detail": 0, "format": 0, "version": 1}, {"mode": "normal", "text": "Shailja Gupta", "type": "text", "style": "", "detail": 0, "format": 1, "version": 1}, {"mode": "normal", "text": " puts it, AI is now foundational, from automating tasks to personalizing content in news, entertainment, and advertising. On platforms like Meta and X (formerly Twitter), it powers everything from content moderation to real-time search via tools like Grok.", "type": "text", "style": "", "detail": 0, "format": 0, "version": 1}], "direction": "ltr", "textStyle": "", "textFormat": 0}, {"type": "paragraph", "format": "", "indent": 0, "version": 1, "children": [{"rel": null, "url": "https://aifn.co/profile/ganesh-kumar-suresh", "type": "link", "title": null, "format": "", "indent": 0, "target": null, "version": 1, "children": [{"mode": "normal", "text": "Ganesh Kumar Suresh", "type": "text", "style": "", "detail": 0, "format": 1, "version": 1}], "direction": "ltr"}, {"mode": "normal", "text": " expands on this: AI isn\u2019t just saving time; it\u2019s unlocking new creative and commercial possibilities. It drafts copy, edits videos, suggests scripts, and analyzes distribution\u2014all in real time. \u201cThis isn\u2019t about replacing creativity,\u201d he writes. 
\u201cIt\u2019s about scaling it with precision.\u201d", "type": "text", "style": "", "detail": 0, "format": 0, "version": 1}], "direction": "ltr", "textStyle": "", "textFormat": 0}, {"type": "paragraph", "format": "", "indent": 0, "version": 1, "children": [{"mode": "normal", "text": "That precision shows up in marketing, too. ", "type": "text", "style": "", "detail": 0, "format": 0, "version": 1}, {"rel": null, "url": "https://aifn.co/profile/paras-doshi", "type": "link", "title": null, "format": "", "indent": 0, "target": null, "version": 1, "children": [{"mode": "normal", "text": "Paras Doshi", "type": "text", "style": "", "detail": 0, "format": 1, "version": 1}], "direction": "ltr"}, {"mode": "normal", "text": " sees AI enabling true 1:1 communication between brands and audiences\u2014adaptive, dynamic, and context-aware storytelling. ", "type": "text", "style": "", "detail": 0, "format": 0, "version": 1}, {"rel": null, "url": "https://aifn.co/profile/preetham-kaukuntla", "type": "link", "title": null, "format": "", "indent": 0, "target": null, "version": 1, "children": [{"mode": "normal", "text": "Preetham Kaukuntla", "type": "text", "style": "", "detail": 0, "format": 1, "version": 1}], "direction": "ltr"}, {"mode": "normal", "text": " adds a word of caution: \u201cIt\u2019s powerful, but we have to be thoughtful\u2026 the goal should be to use AI to support great storytelling, not replace it.\u201d", "type": "text", "style": "", "detail": 0, "format": 0, "version": 1}], "direction": "ltr", "textStyle": "", "textFormat": 0}, {"tag": "h3", "type": "heading", "format": "", "indent": 0, "version": 1, "children": [{"mode": "normal", "text": "The New Editorial Mandate: Verify, Label, and Explain", "type": "text", "style": "", "detail": 0, "format": 0, "version": 1}], "direction": "ltr"}, {"type": "paragraph", "format": "", "indent": 0, "version": 1, "children": [{"mode": "normal", "text": "Automation doesn\u2019t absolve responsibility\u2014it increases it. 
As AI writes, edits, and filters more content, maintaining editorial integrity becomes a first principle. ", "type": "text", "style": "", "detail": 0, "format": 0, "version": 1}, {"rel": null, "url": "https://aifn.co/profile/dmytro-verner", "type": "link", "title": null, "format": "", "indent": 0, "target": null, "version": 1, "children": [{"mode": "normal", "text": "Dmytro Verner", "type": "text", "style": "", "detail": 0, "format": 1, "version": 1}], "direction": "ltr"}, {"mode": "normal", "text": " underscores the need for transparent labeling of AI-generated content and the evolution of the editor\u2019s role into one of active verification.", "type": "text", "style": "", "detail": 0, "format": 0, "version": 1}], "direction": "ltr", "textStyle": "", "textFormat": 0}, {"type": "paragraph", "format": "", "indent": 0, "version": 1, "children": [{"rel": null, "url": "https://aifn.co/profile/rajesh-sura", "type": "link", "title": null, "format": "", "indent": 0, "target": null, "version": 1, "children": [{"mode": "normal", "text": "Rajesh Sura", "type": "text", "style": "", "detail": 0, "format": 1, "version": 1}], "direction": "ltr"}, {"mode": "normal", "text": " echoes this tension: \u201cWhat we gain in speed and scalability, we risk losing in editorial nuance.\u201d Tools like ChatGPT and Sora are co-writing media, but who decides what\u2019s \u201ctruth\u201d when headlines are machine-generated? 
He advocates for AI-human collaboration, not replacement.", "type": "text", "style": "", "detail": 0, "format": 0, "version": 1}], "direction": "ltr", "textStyle": "", "textFormat": 0}, {"type": "paragraph", "format": "", "indent": 0, "version": 1, "children": [{"mode": "normal", "text": "This sentiment is reinforced by ", "type": "text", "style": "", "detail": 0, "format": 0, "version": 1}, {"rel": null, "url": "https://aifn.co/profile/srinivas-chippagiri", "type": "link", "title": null, "format": "", "indent": 0, "target": null, "version": 1, "children": [{"mode": "normal", "text": "Srinivas Chippagiri", "type": "text", "style": "", "detail": 0, "format": 1, "version": 1}], "direction": "ltr"}, {"mode": "normal", "text": " and ", "type": "text", "style": "", "detail": 0, "format": 0, "version": 1}, {"rel": null, "url": "https://aifn.co/profile/gayatri-tavva", "type": "link", "title": null, "format": "", "indent": 0, "target": null, "version": 1, "children": [{"mode": "normal", "text": "Gayatri Tavva", "type": "text", "style": "", "detail": 0, "format": 1, "version": 1}], "direction": "ltr"}, {"mode": "normal", "text": ", who argue for clear ethical guidelines, editorial oversight, and human-centered design in AI systems. Trust, they agree, is the bedrock of credible media\u2014and must be actively protected.", "type": "text", "style": "", "detail": 0, "format": 0, "version": 1}], "direction": "ltr", "textStyle": "", "textFormat": 0}, {"tag": "h3", "type": "heading", "format": "", "indent": 0, "version": 1, "children": [{"mode": "normal", "text": "From Consumer Insight to Content Strategy", "type": "text", "style": "", "detail": 0, "format": 0, "version": 1}], "direction": "ltr"}, {"type": "paragraph", "format": "", "indent": 0, "version": 1, "children": [{"mode": "normal", "text": "AI doesn\u2019t just help create\u2014it helps listen. 
", "type": "text", "style": "", "detail": 0, "format": 0, "version": 1}, {"mode": "normal", "text": "Anil Pantangi", "type": "text", "style": "", "detail": 0, "format": 1, "version": 1}, {"mode": "normal", "text": " sees media teams using predictive analytics and sentiment analysis to adapt content in real time. The line between creator and audience is blurring, and smart systems are guiding that shift.", "type": "text", "style": "", "detail": 0, "format": 0, "version": 1}], "direction": "ltr", "textStyle": "", "textFormat": 0}, {"type": "paragraph", "format": "", "indent": 0, "version": 1, "children": [{"mode": "normal", "text": "Sathyan Munirathinam", "type": "text", "style": "", "detail": 0, "format": 1, "version": 1}, {"mode": "normal", "text": " points to companies like Netflix, Spotify, and Bloomberg already using AI to match content with user preferences and speed up production. On YouTube, tools like TubeBuddy and vidIQ help optimize content strategy based on performance data.", "type": "text", "style": "", "detail": 0, "format": 0, "version": 1}], "direction": "ltr", "textStyle": "", "textFormat": 0}, {"type": "paragraph", "format": "", "indent": 0, "version": 1, "children": [{"rel": null, "url": "https://aifn.co/profile/balakrishna-sudabathula", "type": "link", "title": null, "format": "", "indent": 0, "target": null, "version": 1, "children": [{"mode": "normal", "text": "Balakrishna Sudabathula", "type": "text", "style": "", "detail": 0, "format": 1, "version": 1}], "direction": "ltr"}, {"mode": "normal", "text": " highlights how AI parses trends from social media and streaming metrics to inform what gets made\u2014and how it\u2019s distributed. 
But again, he emphasizes, \u201cMaintaining human oversight is essential\u2026 transparency builds trust.\u201d", "type": "text", "style": "", "detail": 0, "format": 0, "version": 1}], "direction": "ltr", "textStyle": "", "textFormat": 0}, {"tag": "h3", "type": "heading", "format": "", "indent": 0, "version": 1, "children": [{"mode": "normal", "text": "The Ethical Frontier: Can We Still Tell What\u2019s Real?", "type": "text", "style": "", "detail": 0, "format": 0, "version": 1}], "direction": "ltr"}, {"type": "paragraph", "format": "", "indent": 0, "version": 1, "children": [{"mode": "normal", "text": "As AI-generated content floods every format and feed, we\u2019re entering an era where the ", "type": "text", "style": "", "detail": 0, "format": 0, "version": 1}, {"mode": "normal", "text": "signal", "type": "text", "style": "", "detail": 0, "format": 2, "version": 1}, {"mode": "normal", "text": " and the ", "type": "text", "style": "", "detail": 0, "format": 0, "version": 1}, {"mode": "normal", "text": "noise", "type": "text", "style": "", "detail": 0, "format": 2, "version": 1}, {"mode": "normal", "text": " may come from the same model. 
", "type": "text", "style": "", "detail": 0, "format": 0, "version": 1}, {"rel": null, "url": "https://aifn.co/profile/ram-kumar-nimmakayala", "type": "link", "title": null, "format": "", "indent": 0, "target": null, "version": 1, "children": [{"mode": "normal", "text": "Ram Kumar N.", "type": "text", "style": "", "detail": 0, "format": 1, "version": 1}], "direction": "ltr"}, {"mode": "normal", "text": " puts it bluntly: \u201cWe\u2019re not just automating headlines\u2014we\u2019re scaling synthetic content, synthetic data, and sometimes synthetic trust.\u201d", "type": "text", "style": "", "detail": 0, "format": 0, "version": 1}], "direction": "ltr", "textStyle": "", "textFormat": 0}, {"type": "paragraph", "format": "", "indent": 0, "version": 1, "children": [{"mode": "normal", "text": "For him, human judgment becomes the filter, not the fallback. The editorial layer\u2014ethics, nuance, intent\u2014must lead, or risk being left behind. ", "type": "text", "style": "", "detail": 0, "format": 0, "version": 1}, {"rel": null, "url": "https://aifn.co/profile/anuradha-rao", "type": "link", "title": null, "format": "", "indent": 0, "target": null, "version": 1, "children": [{"mode": "normal", "text": "Dr. 
Anuradha Rao", "type": "text", "style": "", "detail": 0, "format": 1, "version": 1}], "direction": "ltr"}, {"mode": "normal", "text": " offers a path forward: collaborative tools, clear accountability, and regulatory frameworks that prioritize creativity and inclusion.", "type": "text", "style": "", "detail": 0, "format": 0, "version": 1}], "direction": "ltr", "textStyle": "", "textFormat": 0}, {"type": "paragraph", "format": "", "indent": 0, "version": 1, "children": [{"rel": null, "url": "https://aifn.co/profile/nivedan-suresh", "type": "link", "title": null, "format": "", "indent": 0, "target": null, "version": 1, "children": [{"mode": "normal", "text": "Nivedan S.", "type": "text", "style": "", "detail": 0, "format": 1, "version": 1}], "direction": "ltr"}, {"mode": "normal", "text": " adds that AI is fundamentally a mirror: it reflects what we prioritize in its design and deployment. \u201cWe must build with transparency, accountability, and editorial integrity, or we risk eroding the very foundation of trust.\u201d", "type": "text", "style": "", "detail": 0, "format": 0, "version": 1}], "direction": "ltr", "textStyle": "", "textFormat": 0}, {"tag": "h3", "type": "heading", "format": "", "indent": 0, "version": 1, "children": [{"mode": "normal", "text": "The Future: Human-Centered Media, Powered by AI", "type": "text", "style": "", "detail": 0, "format": 0, "version": 1}], "direction": "ltr"}, {"type": "paragraph", "format": "", "indent": 0, "version": 1, "children": [{"mode": "normal", "text": "What\u2019s clear across all these voices: ", "type": "text", "style": "", "detail": 0, "format": 0, "version": 1}, {"rel": null, "url": "https://www.aitimejournal.com/", "type": "link", "title": null, "format": "", "indent": 0, "target": null, "version": 1, "children": [{"mode": "normal", "text": "the future of media", "type": "text", "style": "", "detail": 0, "format": 0, "version": 1}], "direction": "ltr"}, {"mode": "normal", "text": " won\u2019t be AI vs. 
humans\u2014it will be humans amplified by AI. Tools can create faster, analyze deeper, and personalize at scale. But values, truth, empathy, and creativity remain human responsibilities.", "type": "text", "style": "", "detail": 0, "format": 0, "version": 1}], "direction": "ltr", "textStyle": "", "textFormat": 0}, {"type": "paragraph", "format": "", "indent": 0, "version": 1, "children": [{"mode": "normal", "text": "This future belongs to those who can navigate both algorithms and ", "type": "text", "style": "", "detail": 0, "format": 0, "version": 1}, {"rel": null, "url": "https://aifn.co/designing-ai-with-foresight-where-ethics-leads-innovation", "type": "link", "title": null, "format": "", "indent": 0, "target": null, "version": 1, "children": [{"mode": "normal", "text": "ethics", "type": "text", "style": "", "detail": 0, "format": 0, "version": 1}], "direction": "ltr"}, {"mode": "normal", "text": ". To those who can blend insight with intuition. And to those who recognize that in an AI-powered media world, trust is the most important story we can tell.", "type": "text", "style": "", "detail": 0, "format": 0, "version": 1}], "direction": "ltr", "textStyle": "", "textFormat": 0}], "direction": "ltr"}}

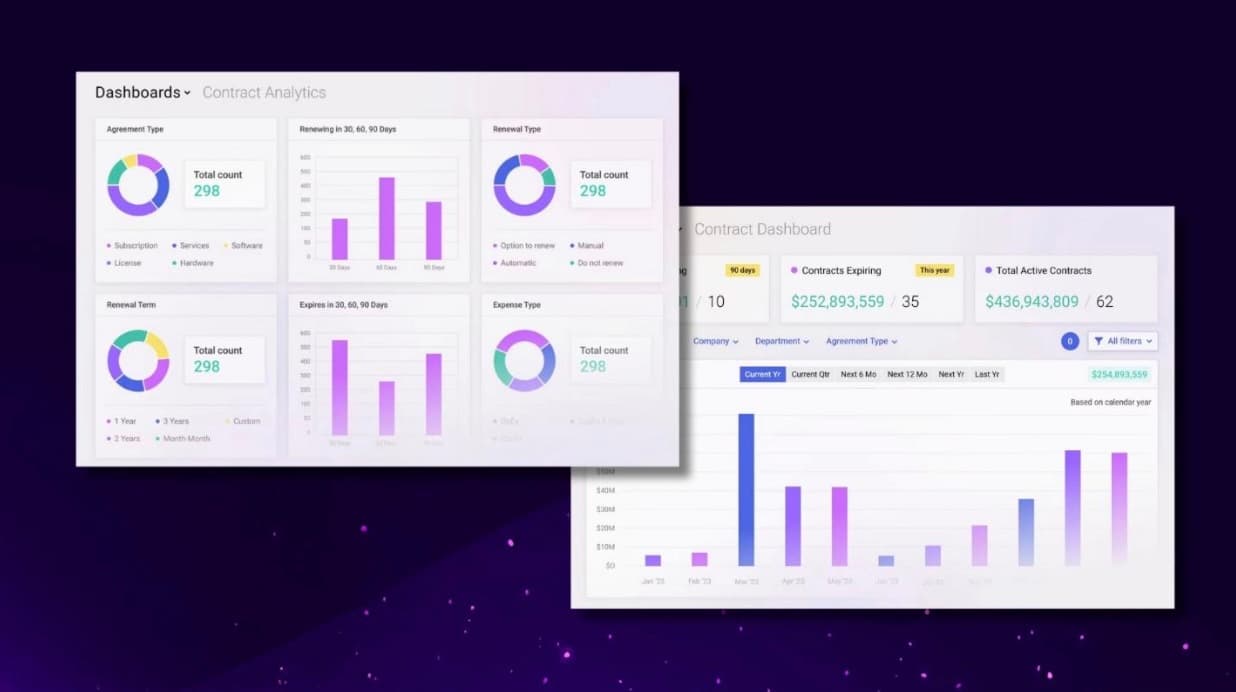
